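Contents

- Environment preparation
- Docker
- Building the Swarm cluster
- Opening the firewall (required by Swarm)
- Creating the Swarm
- Joining the Swarm
- Service constraints
- Single-host cluster
- Creating the containers
- Starting the containers
- Entering a container to start the cluster
- Distributed cluster
- Deployment
- docker-compose.yml
- wait-for-it.sh
- redis-start.sh
- Starting
- Directory structure
- Tearing down the deployment
- Testing
- Problems
- Script download and quick start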

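When I first tried to deploy a Redis cluster with Swarm, I read many posts online and found that most of them describe a single-host cluster: several Redis containers are started on one server, and the cluster is then created by hand from inside one of them. After some research, I arrived at an approach that needs only a docker-compose.yml file and a single start command to deploy Redis in a distributed fashion, placing the nodes on different machines and building the cluster automatically.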

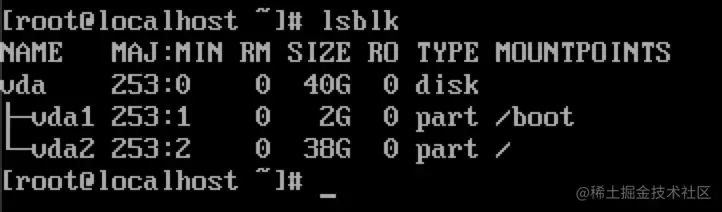

Environment preparation

Four virtual machines

- 192.168.2.38 (manager node)
- 192.168.2.81 (worker node)
- 192.168.2.100 (worker node)
- 192.168.2.102 (worker node)

Time synchronization

Run the following on every machine

yum install -y ntp
cat <<EOF>>/var/spool/cron/root
00 12 * * * /usr/sbin/ntpdate -u ntp1.aliyun.com && /usr/sbin/hwclock -w
EOF

## View the scheduled tasks
crontab -l

## Run the synchronization manually
/usr/sbin/ntpdate -u ntp1.aliyun.com && /usr/sbin/hwclock -w
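Running the heredoc above a second time appends the same cron line again. The following is a minimal sketch of an idempotent alternative; the helper name append_once is hypothetical and not part of the original setup.

```shell
# Append a line to a file only if an identical line is not already present.
# append_once is a hypothetical helper name used for illustration.
append_once() {
    line=$1
    file=$2
    touch "$file"
    # -x matches the whole line, -F treats the pattern as a fixed string
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

CRON_LINE='00 12 * * * /usr/sbin/ntpdate -u ntp1.aliyun.com && /usr/sbin/hwclock -w'

# Example (run as root on each machine):
#   append_once "$CRON_LINE" /var/spool/cron/root
```

Calling it repeatedly leaves exactly one copy of the entry in the file.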

Docker

Install Docker

curl -sSL https://get.daocloud.io/docker | sh

Start Docker

sudo systemctl start docker

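Building the Swarm cluster

Opening the firewall (required by Swarm)

Open port 2377 (cluster management) on the manager node

# manager
firewall-cmd --zone=public --add-port=2377/tcp --permanent

Open the following ports on all nodes (7946 for node-to-node communication, 4789 for overlay network traffic)

# all nodes
firewall-cmd --zone=public --add-port=7946/tcp --permanent
firewall-cmd --zone=public --add-port=7946/udp --permanent
firewall-cmd --zone=public --add-port=4789/tcp --permanent
firewall-cmd --zone=public --add-port=4789/udp --permanent

Reload the firewall on all nodes and restart Docker

# all nodes
firewall-cmd --reload
systemctl restart docker

If you only want convenience (for example in a throwaway test environment), you can simply turn the firewall off instead.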
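The per-role firewall rules can also be generated rather than typed. This is a small sketch that only prints the commands (a dry run); the function name swarm_firewall_cmds is an assumption made for illustration, and the ports are the ones listed in this section.

```shell
# Print the firewall-cmd lines for one Swarm node.
# role is "manager" or "worker"; nothing is executed, the commands are only printed.
swarm_firewall_cmds() {
    role=$1
    if [ "$role" = "manager" ]; then
        echo "firewall-cmd --zone=public --add-port=2377/tcp --permanent"
    fi
    for port in 7946/tcp 7946/udp 4789/tcp 4789/udp; do
        echo "firewall-cmd --zone=public --add-port=$port --permanent"
    done
    echo "firewall-cmd --reload"
}

swarm_firewall_cmds manager
```

After reviewing the output, it can be piped to a shell on the node, for example swarm_firewall_cmds worker | sh.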

Creating the Swarm

docker swarm init --advertise-addr your_manager_ip

View the join token

[root@manager ~]# docker swarm join-token worker
To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-51b7t8whxn8j6mdjt5perjmec9u8qguxq8tern9nill737pra2-ejc5nw5f90oz6xldcbmrl2ztu 192.168.2.61:2377

[root@manager ~]#

Joining the Swarm

Run the join command on each worker node, pointing at the manager's address

docker swarm join --token SWMTKN-1-51b7t8whxn8j6mdjt5perjmec9u8qguxq8tern9nill737pra2-ejc5nw5f90oz6xldcbmrl2ztu 192.168.2.38:2377

# List the nodes (on the manager)
docker node ls
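To avoid copying the long token by hand, the join command can be assembled from the token and the manager address. A minimal sketch, assuming the manager listens on the default port 2377; join_cmd is a hypothetical helper name.

```shell
# Build the worker join command from a token and the manager IP.
# join_cmd is a hypothetical helper; 2377 is the Swarm management port opened earlier.
join_cmd() {
    token=$1
    manager_ip=$2
    echo "docker swarm join --token ${token} ${manager_ip}:2377"
}

# Example on the manager (docker swarm join-token -q prints only the token):
#   join_cmd "$(docker swarm join-token -q worker)" 192.168.2.38
```

The printed line is what each worker node then runs.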

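Service constraints

Add labels (replace the placeholders with the node names shown by docker node ls)

sudo docker node update --label-add redis1=true manager-node-name
sudo docker node update --label-add redis2=true worker-node-name
sudo docker node update --label-add redis3=true worker-node-name
sudo docker node update --label-add redis4=true worker-node-name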
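The label assignment can be driven from a small table of label and node name pairs. This is a sketch only: the node names in the example (manager, worker1, and so on) are hypothetical, and label_cmds is an illustrative helper that prints the commands instead of running them.

```shell
# Read "label node-name" pairs from stdin and print one
# docker node update command per pair (dry run).
label_cmds() {
    while read -r label node; do
        [ -n "$label" ] || continue
        echo "docker node update --label-add ${label}=true ${node}"
    done
}

# Hypothetical node names; use the names reported by "docker node ls".
label_cmds <<'EOF'
redis1 manager
redis2 worker1
redis3 worker2
redis4 worker3
EOF
```

Once applied, the labels on a node can be checked with docker node inspect -f '{{ .Spec.Labels }}' followed by the node name.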

Single-host cluster

Drawback: all containers run on one machine, so if that machine goes down, the whole cluster goes down with it.

Creating the containers

Tip: this could be wrapped in a script, but since this approach is rarely used, no script is provided here.

docker create --name redis-node1 --net host -v /data/redis-data/node1:/data redis --cluster-enabled yes --cluster-config-file nodes-node-1.conf --port 6379
docker create --name redis-node2 --net host -v /data/redis-data/node2:/data redis --cluster-enabled yes --cluster-config-file nodes-node-2.conf --port 6380
docker create --name redis-node3 --net host -v /data/redis-data/node3:/data redis --cluster-enabled yes --cluster-config-file nodes-node-3.conf --port 6381
docker create --name redis-node4 --net host -v /data/redis-data/node4:/data redis --cluster-enabled yes --cluster-config-file nodes-node-4.conf --port 6382
docker create --name redis-node5 --net host -v /data/redis-data/node5:/data redis --cluster-enabled yes --cluster-config-file nodes-node-5.conf --port 6383
docker create --name redis-node6 --net host -v /data/redis-data/node6:/data redis --cluster-enabled yes --cluster-config-file nodes-node-6.conf --port 6384

Starting the containers

docker start redis-node1 redis-node2 redis-node3 redis-node4 redis-node5 redis-node6

Entering a container to start the cluster

# Enter one of the nodes
docker exec -it redis-node1 /bin/bash

# Create the cluster
redis-cli --cluster create 192.168.2.38:6379 192.168.2.38:6380 192.168.2.38:6381 192.168.2.38:6382 192.168.2.38:6383 192.168.2.38:6384 --cluster-replicas 1

# --cluster-replicas 1 means a one-to-one ratio: one replica per master
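The six docker create commands differ only in the node number and the port, so the script mentioned in the tip could look roughly like this. It is a sketch that prints the commands for review; redis_create_cmds is a hypothetical name, and the paths and ports are the ones used above.

```shell
# Print the docker create command for each of the six single-host nodes.
# Node n listens on port 6378 + n (6379 through 6384).
redis_create_cmds() {
    n=1
    while [ "$n" -le 6 ]; do
        port=$((6378 + n))
        echo "docker create --name redis-node$n --net host -v /data/redis-data/node$n:/data redis --cluster-enabled yes --cluster-config-file nodes-node-$n.conf --port $port"
        n=$((n + 1))
    done
}

redis_create_cmds
```

Piping the output to sh creates the containers, which can then be started with the docker start command shown above.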

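Distributed cluster

A Redis cluster needs at least three master nodes, so this setup builds a cluster of three masters and three replicas. Because only four machines are available, the compose file places the first three nodes on the same machine (the manager).

Deployment

Create the following on the manager node of the Swarm cluster

mkdir /root/redis-swarm
cd /root/redis-swarm
vi docker-compose.yml

docker-compose.yml

Notes:

- The first six services are the Redis nodes. The last one, redis-start, uses the redis-cli client to create the cluster and stops automatically once the cluster has been built.
- redis-start can only create the cluster after all six Redis nodes are up, which is why the wait-for-it.sh script is needed.
- Because redis-cli --cluster create does not accept network aliases, a separate script, redis-start.sh, resolves the names to IP addresses first.

The same scripts can also deploy a single-host cluster: start them without Swarm and comment out the network driver line (driver: overlay) in docker-compose.yml.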

version: '3.7'
services:
  redis-node1:
    image: redis
    hostname: redis-node1
    ports:
      - 6379:6379
    networks:
      - redis-swarm
    volumes:
      - "node1:/data"
    command: redis-server --cluster-enabled yes --cluster-config-file nodes-node-1.conf
    deploy:
      mode: replicated
      replicas: 1
      resources:
        limits:
          # cpus: '0.001'
          memory: 5120M
        reservations:
          # cpus: '0.001'
          memory: 512M
      placement:
        constraints:
          - node.role==manager
  redis-node2:
    image: redis
    hostname: redis-node2
    ports:
      - 6380:6379
    networks:
      - redis-swarm
    volumes:
      - "node2:/data"
    command: redis-server --cluster-enabled yes --cluster-config-file nodes-node-2.conf
    deploy:
      mode: replicated
      replicas: 1
      resources:
        limits:
          # cpus: '0.001'
          memory: 5120M
        reservations:
          # cpus: '0.001'
          memory: 512M
      placement:
        constraints:
          - node.role==manager
  redis-node3:
    image: redis
    hostname: redis-node3
    ports:
      - 6381:6379
    networks:
      - redis-swarm
    volumes:
      - "node3:/data"
    command: redis-server --cluster-enabled yes --cluster-config-file nodes-node-3.conf
    deploy:
      mode: replicated
      resources:
        limits:
          # cpus: '0.001'
          memory: 5120M
        reservations:
          # cpus: '0.001'
          memory: 512M
      replicas: 1
      placement:
        constraints:
          - node.role==manager
  redis-node4:
    image: redis
    hostname: redis-node4
    ports:
      - 6382:6379
    networks:
      - redis-swarm
    volumes:
      - "node4:/data"
    command: redis-server --cluster-enabled yes --cluster-config-file nodes-node-4.conf
    deploy:
      mode: replicated
      replicas: 1
      resources:
        limits:
          # cpus: '0.001'
          memory: 5120M
        reservations:
          # cpus: '0.001'
          memory: 512M
      placement:
        constraints:
          - node.labels.redis2==true
  redis-node5:
    image: redis
    hostname: redis-node5
    ports:
      - 6383:6379
    networks:
      - redis-swarm
    volumes:
      - "node5:/data"
    command: redis-server --cluster-enabled yes --cluster-config-file nodes-node-5.conf
    deploy:
      mode: replicated
      replicas: 1
      resources:
        limits:
          # cpus: '0.001'
          memory: 5120M
        reservations:
          # cpus: '0.001'
          memory: 512M
      placement:
        constraints:
          - node.labels.redis3==true
  redis-node6:
    image: redis
    hostname: redis-node6
    ports:
      - 6384:6379
    networks:
      - redis-swarm
    volumes:
      - "node6:/data"
    command: redis-server --cluster-enabled yes --cluster-config-file nodes-node-6.conf
    deploy:
      mode: replicated
      replicas: 1
      resources:
        limits:
          # cpus: '0.001'
          memory: 5120M
        reservations:
          # cpus: '0.001'
          memory: 512M
      placement:
        constraints:
          - node.labels.redis4==true
  redis-start:
    image: redis
    hostname: redis-start
    networks:
      - redis-swarm
    volumes:
      - "$PWD/start:/redis-start"
    depends_on:
      - redis-node1
      - redis-node2
      - redis-node3
      - redis-node4
      - redis-node5
      - redis-node6
    command: /bin/bash -c "chmod 777 /redis-start/redis-start.sh && chmod 777 /redis-start/wait-for-it.sh && /redis-start/redis-start.sh"
    deploy:
      restart_policy:
        condition: on-failure
        delay: 5s
        max_attempts: 5
      placement:
        constraints:
          - node.role==manager
networks:
  redis-swarm:
    driver: overlay
volumes:
  node1:
  node2:
  node3:
  node4:
  node5:
  node6:

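wait-for-it.sh

Create the start directory and both scripts inside it

mkdir /root/redis-swarm/start
cd /root/redis-swarm/start
vi wait-for-it.sh
vi redis-start.sh

Contents of wait-for-it.sh: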

#!/usr/bin/env bash
# Use this script to test if a given TCP host/port are available

cmdname=$(basename $0)

echoerr() { if [[ $QUIET -ne 1 ]]; then echo "$@" 1>&2; fi }

usage()
{
    cat << USAGE >&2
Usage:
    $cmdname host:port [-s] [-t timeout] [-- command args]
    -h HOST | --host=HOST       Host or IP under test
    -p PORT | --port=PORT       TCP port under test
                                Alternatively, you specify the host and port as host:port
    -s | --strict               Only execute subcommand if the test succeeds
    -q | --quiet                Don't output any status messages
    -t TIMEOUT | --timeout=TIMEOUT
                                Timeout in seconds, zero for no timeout
    -- COMMAND ARGS             Execute command with args after the test finishes
USAGE
    exit 1
}

wait_for()
{
    if [[ $TIMEOUT -gt 0 ]]; then
        echoerr "$cmdname: waiting $TIMEOUT seconds for $HOST:$PORT"
    else
        echoerr "$cmdname: waiting for $HOST:$PORT without a timeout"
    fi
    start_ts=$(date +%s)
    while :
    do
        (echo > /dev/tcp/$HOST/$PORT) >/dev/null 2>&1
        result=$?
        if [[ $result -eq 0 ]]; then
            end_ts=$(date +%s)
            echoerr "$cmdname: $HOST:$PORT is available after $((end_ts - start_ts)) seconds"
            break
        fi
        sleep 1
    done
    return $result
}

wait_for_wrapper()
{
    # In order to support SIGINT during timeout: http://unix.stackexchange.com/a/57692
    if [[ $QUIET -eq 1 ]]; then
        timeout $TIMEOUT $0 --quiet --child --host=$HOST --port=$PORT --timeout=$TIMEOUT &
    else
        timeout $TIMEOUT $0 --child --host=$HOST --port=$PORT --timeout=$TIMEOUT &
    fi
    PID=$!
    trap "kill -INT -$PID" INT
    wait $PID
    RESULT=$?
    if [[ $RESULT -ne 0 ]]; then
        echoerr "$cmdname: timeout occurred after waiting $TIMEOUT seconds for $HOST:$PORT"
    fi
    return $RESULT
}

# process arguments
while [[ $# -gt 0 ]]
do
    case "$1" in
        *:* )
        hostport=(${1//:/ })
        HOST=${hostport[0]}
        PORT=${hostport[1]}
        shift 1
        ;;
        --child)
        CHILD=1
        shift 1
        ;;
        -q | --quiet)
        QUIET=1
        shift 1
        ;;
        -s | --strict)
        STRICT=1
        shift 1
        ;;
        -h)
        HOST="$2"
        if [[ $HOST == "" ]]; then break; fi
        shift 2
        ;;
        --host=*)
        HOST="${1#*=}"
        shift 1
        ;;
        -p)
        PORT="$2"
        if [[ $PORT == "" ]]; then break; fi
        shift 2
        ;;
        --port=*)
        PORT="${1#*=}"
        shift 1
        ;;
        -t)
        TIMEOUT="$2"
        if [[ $TIMEOUT == "" ]]; then break; fi
        shift 2
        ;;
        --timeout=*)
        TIMEOUT="${1#*=}"
        shift 1
        ;;
        --)
        shift
        CLI="$@"
        break
        ;;
        --help)
        usage
        ;;
        *)
        echoerr "Unknown argument: $1"
        usage
        ;;
    esac
done

if [[ "$HOST" == "" || "$PORT" == "" ]]; then
    echoerr "Error: you need to provide a host and port to test."
    usage
fi

TIMEOUT=${TIMEOUT:-15}
STRICT=${STRICT:-0}
CHILD=${CHILD:-0}
QUIET=${QUIET:-0}

if [[ $CHILD -gt 0 ]]; then
    wait_for
    RESULT=$?
    exit $RESULT
else
    if [[ $TIMEOUT -gt 0 ]]; then
        wait_for_wrapper
        RESULT=$?
    else
        wait_for
        RESULT=$?
    fi
fi

if [[ $CLI != "" ]]; then
    if [[ $RESULT -ne 0 && $STRICT -eq 1 ]]; then
        echoerr "$cmdname: strict mode, refusing to execute subprocess"
        exit $RESULT
    fi
    exec $CLI
else
    exit $RESULT
fi

redis-start.sh

getent hosts xxx looks up the IP address that the hostname xxx maps to (from /etc/hosts and the other configured name sources). The script uses it to turn each service name into an IP address before calling redis-cli.

#!/bin/bash
cd /redis-start/

# Block until every Redis node accepts TCP connections (--timeout=0 waits forever)
bash wait-for-it.sh redis-node1:6379 --timeout=0
bash wait-for-it.sh redis-node2:6379 --timeout=0
bash wait-for-it.sh redis-node3:6379 --timeout=0
bash wait-for-it.sh redis-node4:6379 --timeout=0
bash wait-for-it.sh redis-node5:6379 --timeout=0
bash wait-for-it.sh redis-node6:6379 --timeout=0

echo 'redis-cluster begin'

# Answer the confirmation prompt with "yes" and pass resolved IP:port pairs
echo 'yes' | redis-cli --cluster create --cluster-replicas 1 \
`getent hosts redis-node1 | awk '{ print $1 ":6379" }'` \
`getent hosts redis-node2 | awk '{ print $1 ":6379" }'` \
`getent hosts redis-node3 | awk '{ print $1 ":6379" }'` \
`getent hosts redis-node4 | awk '{ print $1 ":6379" }'` \
`getent hosts redis-node5 | awk '{ print $1 ":6379" }'` \
`getent hosts redis-node6 | awk '{ print $1 ":6379" }'`

echo 'redis-cluster end'
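The startup log further down reports 12 nodes with only six distinct node IDs, which would be consistent with each hostname resolving to two addresses. The sketch below is one possible way to pass a single address per node by keeping only the first line that getent returns. The helper names resolve_ip and node_addrs are hypothetical, and whether the first address is the right one to keep is an assumption to verify in your own environment.

```shell
# Resolve a hostname to one address: the first line printed by getent hosts.
# Assumption: the first address is usable; verify this on your overlay network.
resolve_ip() {
    getent hosts "$1" | awk 'NR == 1 { print $1 }'
}

# Print "ip:6379" for every hostname given, separated by spaces.
# Fails (non-zero) as soon as one name cannot be resolved.
node_addrs() {
    addrs=""
    for host in "$@"; do
        ip=$(resolve_ip "$host")
        if [ -z "$ip" ]; then
            echo "cannot resolve $host" >&2
            return 1
        fi
        addrs="${addrs:+$addrs }$ip:6379"
    done
    echo "$addrs"
}

# Example inside the redis-start container:
#   echo 'yes' | redis-cli --cluster create --cluster-replicas 1 \
#       $(node_addrs redis-node1 redis-node2 redis-node3 redis-node4 redis-node5 redis-node6)
```

Failing early on an unresolved name lets the restart_policy in the compose file retry the whole script instead of creating a partial cluster.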

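Starting

Directory structure

├── docker-compose.yml
└── start
    ├── redis-start.sh
    └── wait-for-it.sh

Run the following on the Swarm manager node

cd /root/redis-swarm
docker stack deploy -c docker-compose.yml redis_cluster

Check the logs of the redis-start service (for example with docker service logs redis_cluster_redis-start). Output like the following means the cluster started successfully. The sample below was captured from a stack named redis-swarm, hence the redis-swarm_ prefix.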

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | wait-for-it.sh: waiting for redis-node1:6379 without a timeout

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | wait-for-it.sh: redis-node1:6379 is available after 18 seconds

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | wait-for-it.sh: waiting for redis-node2:6379 without a timeout

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | wait-for-it.sh: redis-node2:6379 is available after 13 seconds

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | wait-for-it.sh: waiting for redis-node3:6379 without a timeout

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | wait-for-it.sh: redis-node3:6379 is available after 0 seconds

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | wait-for-it.sh: waiting for redis-node4:6379 without a timeout

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | wait-for-it.sh: redis-node4:6379 is available after 0 seconds

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | wait-for-it.sh: waiting for redis-node5:6379 without a timeout

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | wait-for-it.sh: redis-node5:6379 is available after 0 seconds

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | wait-for-it.sh: waiting for redis-node6:6379 without a timeout

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | wait-for-it.sh: redis-node6:6379 is available after 0 seconds

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | redis-cluster begin

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | >>> Performing hash slots allocation on 12 nodes...

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Master[0] -> Slots 0 - 2730

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Master[1] -> Slots 2731 - 5460

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Master[2] -> Slots 5461 - 8191

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Master[3] -> Slots 8192 - 10922

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Master[4] -> Slots 10923 - 13652

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Master[5] -> Slots 13653 - 16383

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Adding replica 10.0.5.6:6379 to 10.0.5.17:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Adding replica 10.0.5.9:6379 to 10.0.5.16:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Adding replica 10.0.5.8:6379 to 10.0.5.18:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Adding replica 10.0.5.12:6379 to 10.0.5.19:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Adding replica 10.0.5.11:6379 to 10.0.5.3:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Adding replica 10.0.5.5:6379 to 10.0.5.2:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | M: 6ce90be6daabc0c700471d03deb3c6bd88c9f0e1 10.0.5.17:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | slots:[0-2730] (2731 slots) master

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | M: 6ce90be6daabc0c700471d03deb3c6bd88c9f0e1 10.0.5.16:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | slots:[2731-5460] (2730 slots) master

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | M: ea9b45ec64c08c17283239f8b8e5405b2d182428 10.0.5.18:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | slots:[5461-8191] (2731 slots) master

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | M: ea9b45ec64c08c17283239f8b8e5405b2d182428 10.0.5.19:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | slots:[8192-10922] (2731 slots) master

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | M: 935c177308232de05b5483776478020de51bc578 10.0.5.3:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | slots:[10923-13652] (2730 slots) master

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | M: 935c177308232de05b5483776478020de51bc578 10.0.5.2:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | slots:[13653-16383] (2731 slots) master

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | S: 1c99e42bcfb28a9fe72952d4e4cc5cd88aded0f9 10.0.5.5:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | replicates 935c177308232de05b5483776478020de51bc578

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | S: 1c99e42bcfb28a9fe72952d4e4cc5cd88aded0f9 10.0.5.6:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | replicates 6ce90be6daabc0c700471d03deb3c6bd88c9f0e1

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | S: 73cf232f232e83126f058cc01458df11146d8537 10.0.5.9:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | replicates 6ce90be6daabc0c700471d03deb3c6bd88c9f0e1

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | S: 73cf232f232e83126f058cc01458df11146d8537 10.0.5.8:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | replicates ea9b45ec64c08c17283239f8b8e5405b2d182428

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | S: ca3c50899d6deb04e296c542cd485791fb3e8922 10.0.5.12:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | replicates ea9b45ec64c08c17283239f8b8e5405b2d182428

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | S: ca3c50899d6deb04e296c542cd485791fb3e8922 10.0.5.11:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | replicates 935c177308232de05b5483776478020de51bc578

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Can I set the above configuration? (type 'yes' to accept): >>> Nodes configuration updated

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | >>> Assign a different config epoch to each node

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | >>> Sending CLUSTER MEET messages to join the cluster

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | Waiting for the cluster to join

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | .

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | >>> Performing Cluster Check (using node 10.0.5.17:6379)

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | M: 6ce90be6daabc0c700471d03deb3c6bd88c9f0e1 10.0.5.17:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | slots:[0-5460] (5461 slots) master

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | 1 additional replica(s)

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | S: ca3c50899d6deb04e296c542cd485791fb3e8922 10.0.5.12:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | slots: (0 slots) slave

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | replicates 935c177308232de05b5483776478020de51bc578

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | M: ea9b45ec64c08c17283239f8b8e5405b2d182428 10.0.5.19:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | slots:[5461-10922] (5462 slots) master

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | 1 additional replica(s)

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | M: 935c177308232de05b5483776478020de51bc578 10.0.5.3:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | slots:[10923-16383] (5461 slots) master

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | 1 additional replica(s)

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | S: 1c99e42bcfb28a9fe72952d4e4cc5cd88aded0f9 10.0.5.6:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | slots: (0 slots) slave

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | replicates 6ce90be6daabc0c700471d03deb3c6bd88c9f0e1

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | S: 73cf232f232e83126f058cc01458df11146d8537 10.0.5.9:6379

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | slots: (0 slots) slave

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | replicates ea9b45ec64c08c17283239f8b8e5405b2d182428

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | [OK] All nodes agree about slots configuration.

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | >>> Check for open slots...

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | >>> Check slots coverage...

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | [OK] All 16384 slots covered.

redis-swarm_redis-start.1.6xawjqf5shfw@hyx-test3 | redis-cluster end
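The slot ranges in the log follow from splitting the 16384 hash slots evenly across the masters. The sketch below reproduces the ranges shown above for both the 6-way proposal and the final 3-master layout. It is not taken from the redis-cli source; it is plain even division with rounding that happens to match this log, and slot_ranges is a hypothetical name.

```shell
# Print the slot range of each master when 16384 slots are split evenly.
# The upper bound of master i (1-based) is round(i * 16384 / masters) - 1,
# computed with integer arithmetic; the last master always ends at 16383.
slot_ranges() {
    masters=$1
    total=16384
    first=0
    i=1
    while [ "$i" -le "$masters" ]; do
        if [ "$i" -eq "$masters" ]; then
            last=$((total - 1))
        else
            last=$(( (2 * i * total + masters) / (2 * masters) - 1 ))
        fi
        echo "Master[$((i - 1))] -> Slots $first - $last"
        first=$((last + 1))
        i=$((i + 1))
    done
}

slot_ranges 3
```

With three masters this gives 0 - 5460, 5461 - 10922 and 10923 - 16383, the same ranges reported by the cluster check at the end of the log.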

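Tearing down the deployment

docker stack rm redis_cluster

Before redeploying the cluster, the data volumes must be cleared, because each Redis node keeps its previous cluster state and data there. Note that this command removes every unused volume on the host, not only the Redis ones.

# Run on every node
docker volume prune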
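A narrower alternative is to remove only the volumes that belong to this stack. The sketch below assumes that docker stack deploy names them stack-name_volume-name (for example redis_cluster_node1); the helper stack_volume_rm_cmds is hypothetical and only prints the commands. On recent Docker releases docker volume prune skips named volumes unless --all is given, which is another reason to remove them by name; check the behavior of your Docker version.

```shell
# Print a "docker volume rm" command for each of the six node volumes of a stack.
# Assumption: volumes are named <stack>_node<n>, as created by docker stack deploy.
stack_volume_rm_cmds() {
    stack=$1
    for n in 1 2 3 4 5 6; do
        echo "docker volume rm ${stack}_node$n"
    done
}

stack_volume_rm_cmds redis_cluster
```

Each host only holds the volumes of the nodes that ran there, so some of the printed commands will report a missing volume on any given machine; docker volume ls shows which ones exist.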

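Testing

Enter one of the node containers and inspect the cluster information

docker exec -it xxx bash
redis-cli -c -h redis-node1 info

Test reading and writing data

Test the failure of one master. Here master node 1 is removed; its replica is node 4, so after node 1 goes down node 4 becomes a master.

# The service name is <stack>_<service>; the sample log above came from a stack named redis-swarm
docker service rm redis_cluster_redis-node1

# Check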

root@redis-node2:/data# redis-cli -c -h redis-node1

Could not connect to Redis at redis-node1:6379: Name or service not known

not connected>

root@redis-node2:/data# redis-cli -c -h redis-node4

redis-node4:6379> info
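Instead of reading the full info output by eye, the cluster state can be checked from the output of the CLUSTER INFO command, which contains a cluster_state field. A minimal sketch; cluster_ok is a hypothetical helper name, and the carriage returns are stripped because the reply lines end in CR LF.

```shell
# Read "cluster info" output on stdin and succeed only if cluster_state is ok.
cluster_ok() {
    tr -d '\r' | grep -qx 'cluster_state:ok'
}

# Example from inside any node container, after node 1 has been removed:
#   redis-cli -h redis-node4 cluster info | cluster_ok && echo "cluster healthy"
```

A non-zero exit status means the state line was missing or reported something other than ok.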

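Problems

redis-cli --cluster create redis-node1:6379 ... (rest omitted)

When the cluster is created with redis-cli inside a container, container names cannot be used, only container IP addresses, because redis-cli does not support aliases here.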

Script download and quick start

Link: https://pan.baidu.com/s/18_YS9ng29e31Az_HBzBC1w?pwd=sp8w

Extraction code: sp8w
